
Value stream analytics

Value stream analytics measures the time it takes to go from an idea to production.

A value stream is the entire work process that delivers value to customers. For example, the DevOps lifecycle is a value stream that starts with the “manage” stage and ends with the “protect” stage.

Use value stream analytics to identify:

- The amount of time it takes to go from an idea to production.

- The velocity of a given project.

- Bottlenecks in the development process.

- Long-running issues or merge requests.

- Factors that cause your software development lifecycle to slow down.

Value stream analytics helps businesses:

- Visualize their end-to-end DevSecOps workstreams.

- Identify and solve inefficiencies.

- Optimize their workstreams to deliver more value, faster.

Value stream analytics is available for projects and groups.

For a click-through demo, see the Value Stream Management product tour.

Feature availability

Value stream analytics offers different features at the project and group level for FOSS and licensed versions.

- On GitLab Free, value stream analytics does not aggregate data. It queries the database directly and applies the date range filter to the creation date of issues and merge requests. You can view value stream analytics with the predefined default stages.

- On GitLab Premium, value stream analytics aggregates data and applies the date range filter on the end event. You can also create, edit, and delete value streams.

| Feature | Group level (licensed) | Project level (licensed) | Project level (FOSS) |
|---|---|---|---|
| Create custom value streams | Yes | Yes | No, only one value stream (default) with the default stages |
| Create custom stages | Yes | Yes | No |
| Filtering (for example, by author, label, milestone) | Yes | Yes | Yes |
| Stage time chart | Yes | Yes | No |
| Total time chart | Yes | Yes | No |
| Tasks by type chart | Yes | No | No |
| DORA metrics | Yes | Yes | No |
| Cycle time and lead time summary (Lifecycle metrics) | Yes | Yes | No |
| New issues, commits, and deploys (Lifecycle metrics) | Yes, excluding commits | Yes | Yes |
| Uses aggregated backend | Yes | Yes | No |
| Date filter behavior | Filters items finished within the date range | Filters items by creation date | Filters items by creation date |
| Authorization | At least the Reporter role | At least the Reporter role | Can be public |

ProjectNamespace. For details about this consolidation initiative, see the Organization documentation.

How value stream analytics works

Value stream analytics calculates the duration of every stage of your software development process.

Value stream analytics is made of three core objects:

- A value stream contains a value stream stage list.

- Each value stream stage list contains one or more stages.

- Each stage has two events: start and stop.
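The three objects can be pictured as a small data model. The following plain-Ruby sketch is illustrative only: Event, Stage, and ValueStream are hypothetical structs, not GitLab's internal classes, and the stage names and event pairs are examples.

```ruby
# Hypothetical data model for illustration; not GitLab's internal classes.
Event = Struct.new(:name)                           # for example, "MR created"
Stage = Struct.new(:name, :start_event, :end_event) # one start event and one end event
ValueStream = Struct.new(:name, :stages)            # stages is the value stream stage list

value_stream = ValueStream.new(
  "Backend team",
  [
    Stage.new("Code review", Event.new("MR created"), Event.new("MR merged")),
    Stage.new("Release", Event.new("MR merged"), Event.new("MR first deployed"))
  ]
)

# Print each stage with its event pair
value_stream.stages.each do |stage|
  puts "#{stage.name}: #{stage.start_event.name} -> #{stage.end_event.name}"
end
```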

Value stream stages

A stage represents an event pair (a start event and an end event) with additional metadata, such as the name of the stage. You can configure stages only with event pairs that are allowed by the pairing rules defined in the backend.

Value streams

Value streams are container objects for the stages. You can have multiple value streams per group, to focus on different aspects of the DevOps lifecycle.

Value stream stage events

- Merge request first reviewer assigned event introduced in GitLab 17.2. Reviewer assignment events in merge requests created or updated prior to GitLab 17.2 are not available for reporting.

Events are the smallest building blocks of the value stream analytics feature. A stage consists of a start event and an end event.

The following stage events are available:

- Issue closed

- Issue created

- Issue first added to board

- Issue first added to iteration

- Issue first assigned

- Issue first associated with milestone

- Issue first mentioned

- Issue label added

- Issue label removed

- MR closed

- MR merged

- MR created

- MR first commit time

- MR first assigned

- MR first reviewer assigned

- MR first deployed

- MR label added

- MR label removed

- MR last pipeline duration

These events are used to calculate the duration of a stage with the formula: duration = end event time - start event time.

To learn what start and end events can be paired, see Validating start and end events.
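The duration formula maps directly onto timestamp arithmetic. A minimal sketch, assuming both event times are available as Ruby Time objects; the timestamps and the stage_duration helper are hypothetical.

```ruby
require "time"

# duration = end event time - start event time
# Subtracting two Time objects returns the difference in seconds as a Float.
def stage_duration(start_time, end_time)
  end_time - start_time
end

start_time = Time.parse("2024-03-01 09:00:00 UTC") # for example, "Issue created"
end_time   = Time.parse("2024-03-01 11:00:00 UTC") # for example, "Issue first associated with milestone"

seconds = stage_duration(start_time, end_time)
puts "#{seconds / 3600} hours" # prints "2.0 hours"
```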

How value stream analytics aggregates data

- Enable filtering by stop date added in GitLab 15.0

Value stream analytics uses a backend process to collect and aggregate stage-level data, which ensures it can scale for large groups with a high number of issues and merge requests. Due to this process, there may be a slight delay between when an action is taken (for example, closing an issue) and when the data displays on the value stream analytics page.

It may take up to 10 minutes to process the data and display results. Data collection may take longer than 10 minutes in the following cases:

- If this is the first time you are viewing value stream analytics and have not yet created a value stream.

- If the group hierarchy has been re-arranged.

- If there have been bulk updates on issues and merge requests.

To view when the data was most recently updated, in the right corner next to Edit, hover over the Last updated badge.

How value stream analytics measures stages

Value stream analytics measures each stage from its start event to its end event. Only items that have reached their end event are included in the stage time calculation.
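The pre-defined stages in the following table report a median over completed items. A minimal sketch of that idea, assuming each item is a hypothetical record with start and end timestamps, where a nil end timestamp means the item has not reached the end event yet. GitLab computes this in the database, so details such as the handling of even-sized samples may differ.

```ruby
# Hypothetical work item: end_at is nil until the item reaches the stage's end event.
WorkItem = Struct.new(:title, :start_at, :end_at)

def median(values)
  return nil if values.empty?

  sorted = values.sort
  mid = sorted.length / 2
  sorted.length.odd? ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2.0
end

# Median stage time in hours, counting only items that reached the end event.
def stage_median_hours(items)
  completed = items.reject { |item| item.end_at.nil? }
  median(completed.map { |item| (item.end_at - item.start_at) / 3600.0 })
end

items = [
  WorkItem.new("Issue A", Time.utc(2024, 3, 1, 9), Time.utc(2024, 3, 1, 11)),  # 2 h
  WorkItem.new("Issue B", Time.utc(2024, 3, 1, 9), Time.utc(2024, 3, 1, 14)),  # 5 h
  WorkItem.new("Issue C", Time.utc(2024, 3, 1, 10), Time.utc(2024, 3, 1, 13)), # 3 h
  WorkItem.new("Issue D", Time.utc(2024, 3, 1, 9), nil)                        # still in progress
]
puts stage_median_hours(items) # 3.0
```

Issue D is ignored because it has no end event, so the median is taken over 2, 3, and 5 hours.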

By default, blocked issues are not included in the lifecycle overview. However, you can use custom labels (for example, workflow::blocked) to track them.

You can customize stages in value stream analytics based on pre-defined events. To help you with the configuration, GitLab provides a pre-defined list of stages that you can use as a template. For example, you can define a stage that starts when you add a label to an issue, and ends when you add another label.

The following table gives an overview of the pre-defined stages in value stream analytics.

| Stage | Measurement method |
|---|---|
| Issue | The median time between creating an issue and taking action to solve it, by either labeling it or adding it to a milestone, whichever comes first. The label is tracked only if it already has an issue board list created for it. |
| Plan | The median time between the action you took for the previous stage and pushing the first commit to the branch. The first commit on the branch triggers the separation between Plan and Code. At least one of the commits in the branch must contain the related issue number (for example, #42). If none of the commits in the branch mention the related issue number, it is not considered in the measurement time of the stage. |
| Code | The median time between pushing a first commit (previous stage) and creating a merge request (MR) related to that commit. To keep the process tracked, include the issue closing pattern in the description of the merge request. For example, Closes #xxx, where xxx is the number of the issue related to this merge request. If the closing pattern is not present, the calculation uses the creation time of the first commit in the merge request as the start time. |
| Test | The median time to run the entire pipeline for that project. It’s related to the time GitLab CI/CD takes to run every job for the commits pushed to that merge request. In other words, it is the start-to-finish time of all pipelines. |
| Review | The median time taken to review a merge request that has a closing issue pattern, from its creation until it’s merged. |
| Staging | The median time between merging a merge request that has a closing issue pattern and the very first deployment to a production environment. If there isn’t a production environment, this stage is not tracked. |
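Several of these stages depend on text in commits and merge request descriptions: the Plan stage needs a commit that mentions the issue number, and the later stages rely on the closing pattern. The regular expression below is a simplified stand-in, not GitLab's actual default closing pattern, which accepts more forms (for example, additional keywords and cross-project references). The helper names are hypothetical.

```ruby
# Simplified approximation of an issue closing pattern such as "Closes #42".
CLOSING_PATTERN = /\b(?:clos(?:e[sd]?|ing)|fix(?:e[sd]|ing)?|resolv(?:e[sd]?|ing))\b:?\s+#(\d+)/i

# Returns the issue numbers referenced with a closing keyword.
def closed_issue_ids(description)
  description.scan(CLOSING_PATTERN).flatten.map(&:to_i)
end

# True when the text mentions "#<issue_id>" as a whole number (so #420 does not match #42).
def mentions_issue?(commit_message, issue_id)
  commit_message.match?(/(?<!\w)##{issue_id}\b/)
end

puts closed_issue_ids("Fixes: #7 and closes #9").inspect # [7, 9]
puts mentions_issue?("Add form validation for #42", 42)  # true
```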

For information about how value stream analytics calculates each stage, see the Value stream analytics development guide.

Example workflow

This example shows a workflow through all six stages in one day.

If a stage does not include a start and a stop time, its data is not included in the median time. In this example, milestones have been created and CI/CD for testing and setting environments is configured.

- 09:00: Create issue. Issue stage starts.

- 11:00: Add issue to a milestone (or backlog), start work on the issue, and create a branch locally. Issue stage stops and Plan stage starts.

- 12:00: Make the first commit.

- 12:30: Make the second commit to the branch, which mentions the issue number. Because a commit on the branch now mentions the issue, the Plan stage is measured as stopping, and the Code stage as starting, at the time of the first commit (12:00).

- 14:00: Push branch and create a merge request that contains the issue closing pattern. Code stage stops and Test and Review stages start.

- GitLab CI/CD takes 5 minutes to run scripts defined in the .gitlab-ci.yml file.

- 19:00: Merge the merge request. Review stage stops and Staging stage starts.

- 19:30: Deployment to the production environment finishes. Staging stops.

Value stream analytics records the following times for each stage:

- Issue: 09:00 to 11:00: 2 hrs

- Plan: 11:00 to 12:00: 1 hr

- Code: 12:00 to 14:00: 2 hrs

- Test: 5 minutes

- Review: 14:00 to 19:00: 5 hrs

- Staging: 19:00 to 19:30: 30 minutes

Keep in mind the following observations related to this example:

- This example demonstrates that your first commit does not need to mention the issue number. You can mention it later in any commit on the branch you are working on.

- The Test stage is used in the calculation for the overall time of the cycle. It is included in the Review process, as every MR should be tested.

- This example illustrates only one cycle of the six stages. The value stream analytics dashboard shows the median time for multiple cycles.

Cumulative label event duration

- Introduced in GitLab 16.9 with flags named enable_vsa_cumulative_label_duration_calculation and vsa_duration_from_db. Disabled by default.

- Enabled on GitLab.com and self-managed in GitLab 16.10. Feature flag vsa_duration_from_db removed.

- Feature flag enable_vsa_cumulative_label_duration_calculation removed in GitLab 17.0.

With this feature, value stream analytics measures the duration of repetitive events for label-based stages. You should configure label removal or addition events for both start and end events.

For example, a stage tracks when the in progress label is added and removed, with the following times:

- 9:00: label added.

- 10:00: label removed.

- 12:00: label added.

- 14:00: label removed.

With the original calculation method, the duration is five hours (from 9:00 to 14:00). With cumulative label event duration calculation enabled, the duration is three hours (9:00 to 10:00 and 12:00 to 14:00).
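The difference between the two calculations can be reproduced with a short sketch. It assumes the label events for one item are available as hypothetical [time, action] pairs; GitLab performs this calculation in the database, and the "original method" here is approximated as first event to last event.

```ruby
require "time"

# Sums every added -> removed interval for one label on one item.
def cumulative_label_duration(events)
  total = 0.0
  started_at = nil
  events.sort_by(&:first).each do |time, action|
    if action == :added && started_at.nil?
      started_at = time
    elsif action == :removed && started_at
      total += time - started_at
      started_at = nil
    end
  end
  total
end

# Approximation of the original method: first event to last event.
def first_to_last_duration(events)
  times = events.map(&:first)
  times.max - times.min
end

t = ->(hhmm) { Time.parse("2024-03-01 #{hhmm} UTC") }
events = [[t.("09:00"), :added], [t.("10:00"), :removed], [t.("12:00"), :added], [t.("14:00"), :removed]]

puts first_to_last_duration(events) / 3600    # 5.0 (original method)
puts cumulative_label_duration(events) / 3600 # 3.0 (cumulative method)
```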

Reaggregate data after upgrade

On large self-managed GitLab instances, background aggregation can take longer after an upgrade, especially if several minor versions are skipped. This delay can result in outdated data on the Value Stream Analytics page. To speed up the aggregation process and avoid outdated data, you can run the following synchronous aggregation snippet for a given group in the Rails console:

```ruby
group = Group.find(-1) # Replace -1 with your group ID
group_to_aggregate = group.root_ancestor # Aggregation runs on the top-level group

[Issue, MergeRequest].each do |model|
  cursor = {} # Initialize once per model so each batch continues where the previous one stopped

  loop do
    context = Analytics::CycleAnalytics::AggregationContext.new(cursor: cursor)
    service_response = Analytics::CycleAnalytics::DataLoaderService.new(
      group: group_to_aggregate, model: model, context: context
    ).execute

    if service_response.success? && service_response.payload[:reason] == :limit_reached
      # Batch limit reached: keep the cursor and run the next batch
      cursor = service_response.payload[:context].cursor
    elsif service_response.success?
      puts "#{model.name}: finished"
      break
    else
      puts "#{model.name}: failed"
      break
    end
  end
end
```

How value stream analytics identifies the production environment

Value stream analytics identifies production environments by looking for project environments with a name matching any of these patterns:

- prod or prod/*

- production or production/*

These patterns are not case-sensitive.

You can change the name of a project environment in your GitLab CI/CD configuration.
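To check whether an environment name would be treated as production, the documented patterns can be approximated with one case-insensitive regular expression. This is a sketch under that assumption, not GitLab's implementation; the helper name is hypothetical.

```ruby
# Approximation of the documented patterns: prod, prod/*, production, production/*
# (case-insensitive). Not GitLab's actual implementation.
PRODUCTION_ENV = %r{\A(?:prod|production)(?:/.*)?\z}i

def production_environment?(name)
  PRODUCTION_ENV.match?(name)
end

%w[production Prod prod/eu-west production/us staging production-eu].each do |name|
  puts "#{name}: #{production_environment?(name)}"
end
```

Under these patterns, a name such as production-eu is not treated as production, while production/eu would be.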

View value stream analytics

- Predefined date ranges dropdown list introduced in GitLab 16.5 with a flag named vsa_predefined_date_ranges. Disabled by default.

- Predefined date ranges dropdown list enabled on self-managed and GitLab.com in GitLab 16.7.

- Predefined date ranges dropdown list generally available in GitLab 16.9. Feature flag vsa_predefined_date_ranges removed.

Prerequisites:

- You must have at least the Reporter role.

- You must create a custom value stream. Value stream analytics only shows custom value streams created for your group or project.

To view value stream analytics for your group or project:

- On the left sidebar, select Search or go to and find your project or group.

- Select Analyze > Value stream analytics.

- To view metrics for a particular stage, select a stage below the Filter results text box.

- Optional. Filter the results:

  - Select the Filter results text box.

  - Select a parameter.

  - Select a value or enter text to refine the results.

- To view metrics in a particular date range, from the dropdown list select a predefined date range or the Custom option. With the Custom option selected:

  - In the From field, select a start date.

  - In the To field, select an end date.

  The charts and list display workflow items created during the date range.

- Optional. Sort results by ascending or descending:

  - To sort by most recent or oldest workflow item, select the Last event header.

  - To sort by most or least amount of time spent in each stage, select the Duration header.

A badge next to the workflow items table header shows the number of workflow items that completed during the selected stage.

The table shows a list of related workflow items for the selected stage. Based on the stage you select, this can be:

- Issues

- Merge requests

The Last 30 days option covers the current day and the 29 days before it, for a total of 30 days.

Data filters

You can filter value stream analytics to view data that matches specific criteria. The following filters are supported:

- Date range

- Project

- Assignee

- Author

- Milestone

- Label

Value stream analytics metrics

The Overview page in value stream analytics displays key metrics of the DevSecOps lifecycle performance for projects and groups.

Lifecycle metrics

Value stream analytics includes the following lifecycle metrics:

- Lead time: Median time from when the issue was created to when it was closed.

- Cycle time: Median time from first commit to issue closed. GitLab measures cycle time from the earliest commit of a linked issue’s merge request to when that issue is closed. The cycle time approach underestimates the lead time because merge request creation is always later than commit time.

- New issues: Number of new issues created.

- Deploys: Total number of deployments to production.
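The lead time and cycle time definitions can be illustrated with a small calculation. A minimal sketch, assuming hypothetical issue records that already carry the creation time, the earliest commit of the linked merge request, and the close time; GitLab derives these values from its own data.

```ruby
# Hypothetical issue records; first_commit_at is the earliest commit of the linked MR.
IssueRecord = Struct.new(:created_at, :first_commit_at, :closed_at)

def median(values)
  sorted = values.sort
  mid = sorted.length / 2
  sorted.length.odd? ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2.0
end

def hours_between(from, to)
  (to - from) / 3600.0
end

issues = [
  IssueRecord.new(Time.utc(2024, 3, 1, 9), Time.utc(2024, 3, 1, 12), Time.utc(2024, 3, 1, 19)),
  IssueRecord.new(Time.utc(2024, 3, 1, 9), Time.utc(2024, 3, 2, 10), Time.utc(2024, 3, 3, 9)),
  IssueRecord.new(Time.utc(2024, 3, 1, 10), Time.utc(2024, 3, 1, 11), Time.utc(2024, 3, 1, 15))
]

# Lead time: issue created -> issue closed. Cycle time: first commit -> issue closed.
lead_time = median(issues.map { |i| hours_between(i.created_at, i.closed_at) })
cycle_time = median(issues.map { |i| hours_between(i.first_commit_at, i.closed_at) })
puts "Lead time: #{lead_time} h, cycle time: #{cycle_time} h" # Lead time: 10.0 h, cycle time: 7.0 h
```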

DORA metrics

- Introduced time to restore service tile in GitLab 15.0.

- Introduced change failure rate tile in GitLab 15.0.

Value stream analytics includes the following DORA metrics:

- Deployment frequency

- Lead time for changes

- Time to restore service

- Change failure rate

DORA metrics are calculated based on data from the DORA API.

If you have a GitLab Premium or Ultimate subscription:

- The number of successful deployments is calculated with DORA data.

- The data is filtered based on environment and environment tier.
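The underlying DORA data can also be retrieved directly. The following sketch assumes GitLab's REST DORA metrics endpoint for projects (GET /projects/:id/dora/metrics with the metric, start_date, end_date, and interval parameters). The instance URL, project path, and token are placeholders, and nothing is sent unless you call fetch_dora_metrics yourself.

```ruby
require "json"
require "net/http"
require "uri"

# Builds the DORA metrics URL for a project identified by its full path.
def dora_metrics_uri(base_url, project_path, metric, start_date:, end_date:)
  project_id = URI.encode_www_form_component(project_path) # "group/project" -> "group%2Fproject"
  uri = URI("#{base_url}/api/v4/projects/#{project_id}/dora/metrics")
  uri.query = URI.encode_www_form(metric: metric, start_date: start_date, end_date: end_date, interval: "monthly")
  uri
end

# Sends the request with a personal access token and returns the parsed JSON.
def fetch_dora_metrics(uri, token)
  request = Net::HTTP::Get.new(uri)
  request["PRIVATE-TOKEN"] = token
  response = Net::HTTP.start(uri.host, uri.port, use_ssl: uri.scheme == "https") { |http| http.request(request) }
  raise "Request failed with HTTP #{response.code}" unless response.is_a?(Net::HTTPSuccess)

  JSON.parse(response.body)
end

uri = dora_metrics_uri("https://gitlab.example.com", "my-group/my-project", "deployment_frequency",
                       start_date: "2024-03-01", end_date: "2024-03-31")
puts uri
# fetch_dora_metrics(uri, ENV.fetch("GITLAB_TOKEN")) # requires network access and a token
```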

View lifecycle and DORA metrics

Prerequisites:

- To view deployment metrics, you must have a production environment configured.

To view lifecycle metrics:

- On the left sidebar, select Search or go to and find your project or group.

- Select Analyze > Value stream analytics. Lifecycle metrics display below the Filter results text box.

- Optional. Filter the results:

  - Select the Filter results text box. Based on the filter you select, the dashboard automatically aggregates lifecycle metrics and displays the status of the value stream.

  - Select a parameter.

  - Select a value or enter text to refine the results.

- To adjust the date range:

  - In the From field, select a start date.

  - In the To field, select an end date.

To view the Value Streams Dashboard and DORA metrics:

- On the left sidebar, select Search or go to and find your project or group.

- Select Analyze > Value stream analytics.

- Below the Filter results text box, in the Lifecycle metrics row, select Value Streams Dashboard / DORA.

- Optional. To open the Value Streams Dashboard directly, append /-/analytics/dashboards/value_streams_dashboard to the group URL (for example, https://gitlab.com/groups/gitlab-org/-/analytics/dashboards/value_streams_dashboard).
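As the example URL shows, the dashboard path sits under the group's /- segment. A minimal sketch that builds the URL from a group URL; the helper name is hypothetical.

```ruby
# Path taken from the example URL for the Value Streams Dashboard.
DASHBOARD_PATH = "/-/analytics/dashboards/value_streams_dashboard"

def value_streams_dashboard_url(group_url)
  group_url.chomp("/") + DASHBOARD_PATH # tolerate a trailing slash on the group URL
end

puts value_streams_dashboard_url("https://gitlab.com/groups/gitlab-org")
```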

View metrics for each development stage

Value stream analytics shows the median time spent by issues or merge requests in each development stage.

To view the median time spent in each stage by a group:

- On the left sidebar, select Search or go to and find your project or group.

- Select Analyze > Value stream analytics.

- Optional. Filter the results:

  - Select the Filter results text box.

  - Select a parameter.

  - Select a value or enter text to refine the results.

- To adjust the date range:

  - In the From field, select a start date.

  - In the To field, select an end date.

- To view the metrics for each stage, above the Filter results text box, hover over a stage.

View tasks by type

The Tasks by type chart displays the cumulative number of issues and merge requests per day for your group.

The chart uses the global page filters to display data based on the selected group and time frame.

To view tasks by type:

- On the left sidebar, select Search or go to and find your group.

- Select Analyze > Value stream analytics.

- Below the Filter results text box, select Overview. The Tasks by type chart displays below the Total time chart.

- To switch between task types, select the Settings dropdown list and select Issues or Merge Requests.

- To add or remove labels, select the Settings dropdown list and select or search for a label. By default, the top group-level labels (maximum 10) are selected. You can select a maximum of 15 labels.

Create a value stream

- New value stream feature changed from a dialog to a page in GitLab 16.11 with a flag named vsa_standalone_settings_page. Disabled by default.

vsa_standalone_settings_page. On GitLab.com and GitLab Dedicated, this feature is not available. This feature is not ready for production use.

Create a value stream with GitLab default stages

When you create a value stream, you can use GitLab default stages and hide or re-order them. You can also create custom stages in addition to those provided in the default template.

- On the left sidebar, select Search or go to and find your project or group.

- Select Analyze > Value stream analytics.

- Select New value stream.

- Enter a name for the value stream.

- Select Create from default template.

- Customize the default stages:

  - To re-order stages, select the up or down arrows.

  - To hide a stage, select Hide.

- To add a custom stage, select Add another stage.

  - Enter a name for the stage.

  - Select a Start event and a Stop event.

- Select New value stream.

Create a value stream with custom stages

When you create a value stream, you can create and add custom stages that align with your own development workflows.

- On the left sidebar, select Search or go to and find your project or group.

- Select Analyze > Value stream analytics.

- Select New value stream.

- For each stage:

  - Enter a name for the stage.

  - Select a Start event and a Stop event.

- To add another stage, select Add another stage.

- To re-order the stages, select the up or down arrows.

- Select New value stream.

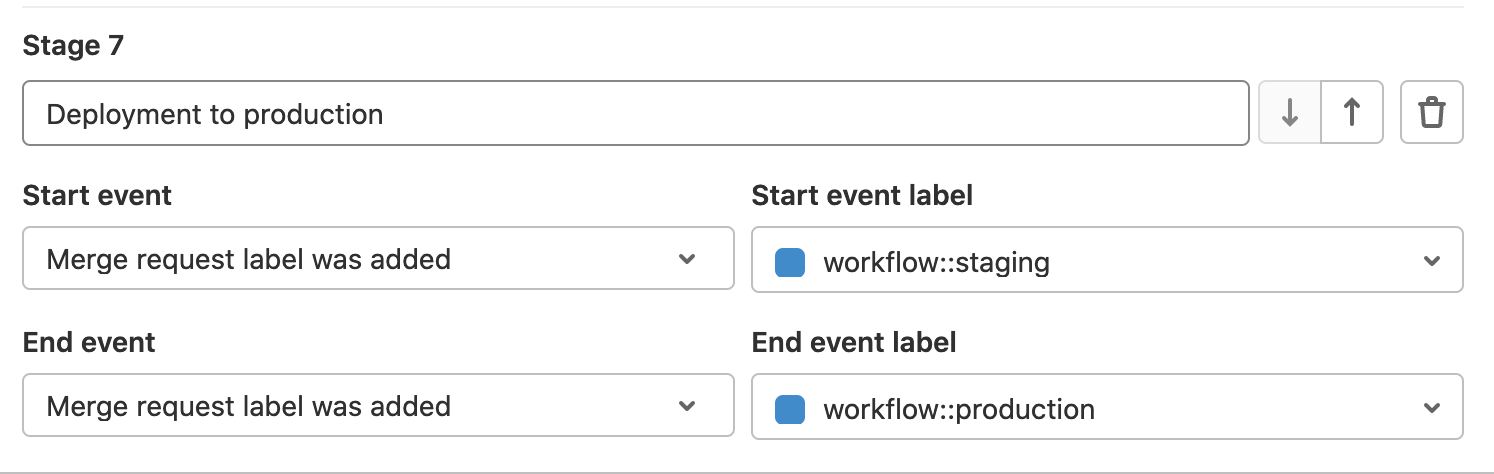

Label-based stages for custom value streams

To measure complex workflows, you can use scoped labels. For example, to measure deployment time from a staging environment to production, you could use the following labels:

- When the code is deployed to staging, the workflow::staging label is added to the merge request.

- When the code is deployed to production, the workflow::production label is added to the merge request.

Automatic data labeling with webhooks

You can automatically add labels by using GitLab webhook events, so that a label is applied to merge requests or issues when a specific event occurs. Then, you can add label-based stages to track your workflow. To learn more about the implementation, see the blog post Applying GitLab Labels Automatically.
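As a rough illustration of that approach, the sketch below decides which scoped label to add for a deployment webhook payload and builds a REST API request that adds it to a merge request. Assumptions: the payload fields object_kind, status, and environment, the add_labels parameter of the merge request update endpoint, and the environment-to-label mapping, which is hypothetical. Finding the merge request for a given deployment is out of scope, and the request is built but never sent.

```ruby
require "net/http"
require "uri"

# Hypothetical mapping from a successful deployment's environment to a scoped label.
ENVIRONMENT_LABELS = {
  "staging" => "workflow::staging",
  "production" => "workflow::production"
}.freeze

# payload is a parsed deployment webhook body (a Hash).
def label_for_deployment(payload)
  return nil unless payload["object_kind"] == "deployment" && payload["status"] == "success"

  ENVIRONMENT_LABELS[payload["environment"]]
end

# Builds, but does not send, a request that adds a label to a merge request.
def add_label_request(base_url, project_id, mr_iid, label, token)
  uri = URI("#{base_url}/api/v4/projects/#{project_id}/merge_requests/#{mr_iid}")
  request = Net::HTTP::Put.new(uri)
  request["PRIVATE-TOKEN"] = token
  request.set_form_data("add_labels" => label)
  request
end

payload = { "object_kind" => "deployment", "status" => "success", "environment" => "production" }
label = label_for_deployment(payload)
request = add_label_request("https://gitlab.example.com", 42, 7, label, "your-token")
puts "#{request.method} #{request.path} #{request.body}"
```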

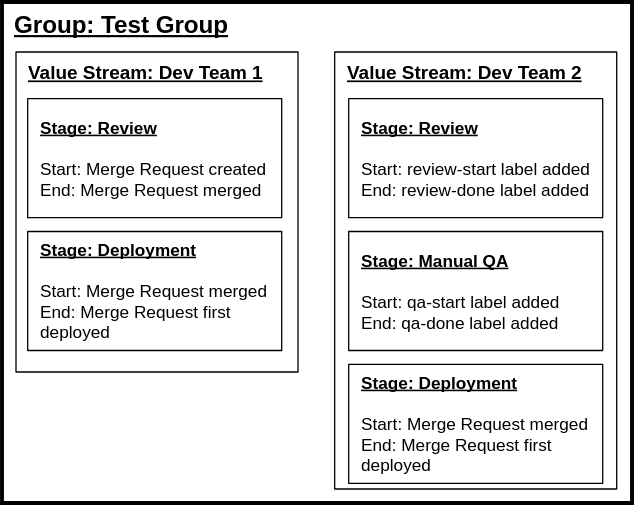

Example for custom value stream configuration

In the example above, two independent value streams are set up for two teams that are using different development workflows in the Test Group (top-level namespace).

The first value stream uses standard timestamp-based events for defining the stages. The second value stream uses label events.

Edit a value stream

- Edit value stream feature changed from a dialog to a page in GitLab 16.11 with a flag named vsa_standalone_settings_page. Disabled by default.

vsa_standalone_settings_page. On GitLab.com and GitLab Dedicated, this feature is not available. This feature is not ready for production use.

After you create a value stream, you can customize it to suit your purposes. To edit a value stream:

- On the left sidebar, select Search or go to and find your project or group.

- Select Analyze > Value stream analytics.

- In the upper-right corner, select the dropdown list, then select a value stream.

- Next to the value stream dropdown list, select Edit.

- Optional:

  - Rename the value stream.

  - Hide or re-order default stages.

  - Remove existing custom stages.

  - To add new stages, select Add another stage.

  - Select the start and end events for the stage.

- Optional. To undo any modifications, select Restore value stream defaults.

- Select Save Value Stream.

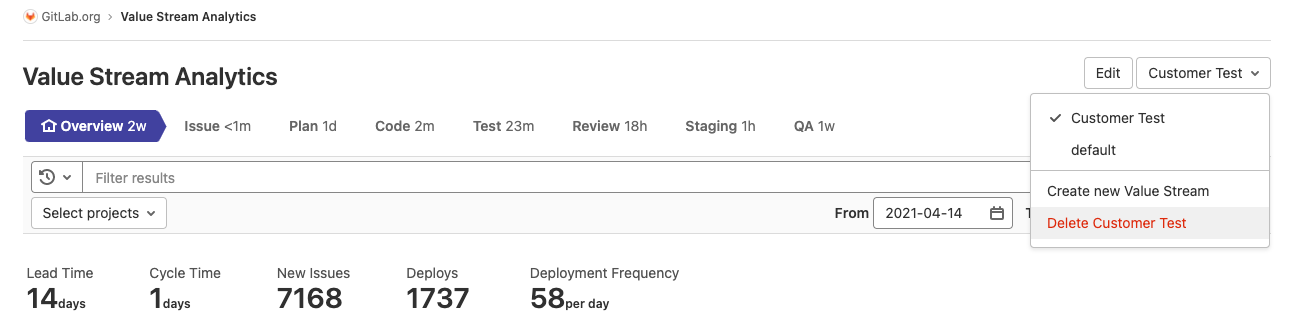

Delete a value stream

To delete a custom value stream:

- On the left sidebar, select Search or go to and find your project or group.

- Select Analyze > Value stream analytics.

- In the upper-right corner, select the dropdown list, then select the value stream you want to delete.

- Select Delete (name of value stream).

- To confirm, select Delete.

View number of days for a cycle to complete

The Total time chart shows the average number of days it takes for development cycles to complete. The chart shows data for the last 500 workflow items.
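The chart's calculation can be sketched as an average over the most recently completed items. A minimal sketch, assuming each workflow item is a hypothetical [start_time, end_time] pair and that "last 500" means the 500 items with the latest end times.

```ruby
SECONDS_PER_DAY = 86_400.0

# Average number of days from start to end over the most recently completed items.
def average_cycle_days(items, limit: 500)
  recent = items.sort_by { |_start, finish| finish }.last(limit)
  return nil if recent.empty?

  total_days = recent.sum { |start, finish| (finish - start) / SECONDS_PER_DAY }
  total_days / recent.size
end

day = ->(d) { Time.utc(2024, 3, d) }
items = [[day.(1), day.(2)], [day.(1), day.(3)], [day.(1), day.(4)]] # 1, 2, and 3 days
puts average_cycle_days(items)           # 2.0
puts average_cycle_days(items, limit: 2) # 2.5
```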

- On the left sidebar, select Search or go to and find your project or group.

- Select Analyze > Value stream analytics.

- Above the Filter results box, select a stage:

  - To view a summary of the cycle time for all stages, select Overview.

  - To view the cycle time for a specific stage, select a stage.

- Optional. Filter the results:

  - Select the Filter results text box.

  - Select a parameter.

  - Select a value or enter text to refine the results.

- To adjust the date range:

  - In the From field, select a start date.

  - In the To field, select an end date.

Access permissions for value stream analytics

Access permissions for value stream analytics depend on the project type.

| Project type | Permissions |
|---|---|
| Public | Anyone can access. |
| Internal | Any authenticated user can access. |
| Private | Any user with at least the Guest role can access. |

Value Stream Analytics GraphQL API

- Loading stage metrics through GraphQL introduced in GitLab 17.0.

With the VSA GraphQL API, you can request metrics from your configured value streams and value stream stages. This can be useful if you want to export VSA data to an external system or for a report.

The following metrics are available:

- Number of completed items in the stage. The count is limited to a maximum of 10,000 items.

- Median duration for the completed items in the stage.

- Average duration for the completed items in the stage.

Request the metrics

Prerequisites:

- You must have at least the Reporter role.

First, you must determine which value stream you want to use in the reporting.

To request the configured value streams for a group, run:

```graphql
query {
  group(fullPath: "your-group-path") {
    valueStreams {
      nodes {
        id
        name
      }
    }
  }
}
```

Similarly, to request the configured value streams for a project, run:

```graphql
query {
  project(fullPath: "your-project-path") {
    valueStreams {
      nodes {
        id
        name
      }
    }
  }
}
```

To request the stages of a value stream, run:

```graphql
query {
  group(fullPath: "your-group-path") {
    valueStreams(id: "your-value-stream-id") {
      nodes {
        stages {
          id
          name
        }
      }
    }
  }
}
```

Depending on how you want to consume the data, you can request metrics for one specific stage or for all stages in your value stream.

To request metrics for a specific stage, run:

```graphql
query {
  group(fullPath: "your-group-path") {
    valueStreams(id: "your-value-stream-id") {
      nodes {
        stages(id: "your-stage-id") {
          id
          name
          metrics(timeframe: { start: "2024-03-01", end: "2024-03-31" }) {
            average {
              value
              unit
            }
            median {
              value
              unit
            }
            count {
              value
              unit
            }
          }
        }
      }
    }
  }
}
```

The metrics node supports additional filtering options:

- Assignee usernames

- Author username

- Label names

- Milestone title

Example request with filters:

```graphql
query {
  group(fullPath: "your-group-path") {
    valueStreams(id: "your-value-stream-id") {
      nodes {
        stages(id: "your-stage-id") {
          id
          name
          metrics(
            labelNames: ["backend"],
            milestoneTitle: "17.0",
            timeframe: { start: "2024-03-01", end: "2024-03-31" }
          ) {
            average {
              value
              unit
            }
            median {
              value
              unit
            }
            count {
              value
              unit
            }
          }
        }
      }
    }
  }
}
```

Best practices

- To get an accurate view of the current status, request metrics as close to the end of the time frame as possible.

- For periodic reporting, you can create a script and use the scheduled pipelines feature to export the data in a timely manner.

- When invoking the API, you get the current data from the database. Over time, the same metrics might change due to changes in the underlying data in the database. For example, moving or removing a project from the group might affect group-level metrics.

- Re-requesting the metrics for previous periods and comparing them to the previously collected metrics can show skews in the data, which can help in discovering and explaining changing trends.
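For periodic reporting, a script run from a scheduled pipeline could send the stage metrics query for the previous calendar month. This is a sketch under assumptions: GRAPHQL_URL, the group path, the value stream and stage IDs, and the VSA_EXPORT_TOKEN variable are placeholders, and the script only sends a request when that variable is set.

```ruby
require "date"
require "json"
require "net/http"
require "uri"

GRAPHQL_URL = "https://gitlab.example.com/api/graphql" # placeholder instance URL

# First and last day of the month before `today`, as ISO 8601 strings.
def previous_month(today = Date.today)
  first_of_this_month = Date.new(today.year, today.month, 1)
  [(first_of_this_month << 1).iso8601, (first_of_this_month - 1).iso8601]
end

# Builds the stage metrics query; to_json quotes and escapes each string argument.
def stage_metrics_query(group_path, value_stream_id, stage_id, from, to)
  <<~GRAPHQL
    query {
      group(fullPath: #{group_path.to_json}) {
        valueStreams(id: #{value_stream_id.to_json}) {
          nodes {
            stages(id: #{stage_id.to_json}) {
              id
              name
              metrics(timeframe: { start: #{from.to_json}, end: #{to.to_json} }) {
                median { value unit }
                count { value unit }
              }
            }
          }
        }
      }
    }
  GRAPHQL
end

def post_graphql(query, token)
  uri = URI(GRAPHQL_URL)
  request = Net::HTTP::Post.new(uri, "Content-Type" => "application/json", "Authorization" => "Bearer #{token}")
  request.body = { query: query }.to_json
  response = Net::HTTP.start(uri.host, uri.port, use_ssl: uri.scheme == "https") { |http| http.request(request) }
  raise "GraphQL request failed with HTTP #{response.code}" unless response.is_a?(Net::HTTPSuccess)

  JSON.parse(response.body)
end

# Only sends a request when a token is provided, for example as a CI/CD variable.
if ENV["VSA_EXPORT_TOKEN"]
  from, to = previous_month
  query = stage_metrics_query("your-group-path", "your-value-stream-id", "your-stage-id", from, to)
  puts JSON.pretty_generate(post_graphql(query, ENV["VSA_EXPORT_TOKEN"]))
end
```

Requesting a closed period (the previous month) keeps each report comparable, and storing the output lets you compare it with later re-requests for the same period.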

Troubleshooting

100% CPU utilization by Sidekiq cronjob:analytics_cycle_analytics

It is possible that value stream analytics background jobs strongly impact performance by monopolizing CPU resources.

To recover from this situation:

- Disable the feature for all projects in the Rails console, and remove existing jobs:

  ```ruby
  # Disable value stream analytics for every project
  Project.find_each do |p|
    p.analytics_access_level = 'disabled'
    p.save!
  end

  # Warning: these calls delete all stored group-level stage records and aggregation records
  Analytics::CycleAnalytics::GroupStage.delete_all
  Analytics::CycleAnalytics::Aggregation.delete_all
  ```

- Configure Sidekiq routing with, for example, a single feature_category=value_stream_management entry and multiple feature_category!=value_stream_management entries. Find other relevant queue metadata in the Enterprise Edition list.

- Enable value stream analytics for one project after another. You might need to tweak the Sidekiq routing further according to your performance requirements.
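For installations that use the Linux package, Sidekiq routing is configured in /etc/gitlab/gitlab.rb. The fragment below is a sketch under assumptions, not a tested recommendation: it assumes the sidekiq['routing_rules'] and sidekiq['queue_groups'] settings, and the queue name value_stream_management is an arbitrary example. It dedicates one Sidekiq process to value stream analytics workers while several processes serve all other work.

```ruby
# /etc/gitlab/gitlab.rb (sketch; verify against the Sidekiq routing documentation for your version)
sidekiq['routing_rules'] = [
  # Route value stream analytics workers to a dedicated queue (example name)
  ['feature_category=value_stream_management', 'value_stream_management'],
  # Route all other workers to the default queue
  ['*', 'default']
]

# One process for the dedicated queue, several for all other work
sidekiq['queue_groups'] = [
  'value_stream_management',
  'default,mailers',
  'default,mailers',
  'default,mailers'
]
```

After editing the file, apply the change with sudo gitlab-ctl reconfigure.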